Few business and technology trends have accelerated as rapidly or as forcefully as artificial intelligence. Digital transformation, cloud computing, and cybersecurity have each reshaped industries.

But AI is different. Its appetite for data, processing power, and speed is unprecedented. It is now disrupting not only software and computing architectures, but also one of the least visible yet most critical enablers of the digital economy: power.

AI’s rapid growth has triggered an energy demand surge unlike anything the data-center sector has experienced. Power is no longer a background requirement; it’s a competitive bottleneck, shaping strategy, constraining deployment, and deciding which companies can scale their AI ambitions. The assumptions that guided data-center power design for decades no longer hold.

“AI scaling laws are driving massive compute demand by predictably unlocking better AI outcomes,” says James Feasey, Senior Sales Director at Edged Infrastructure. “But grid limits and stretched power infrastructure are slowing speed to market, just as the race to scale intensifies.”

Power Quantity: A New Era of Supply and Demand Imbalance

As AI training drives ever-larger compute clusters, data-center power demand has jumped from megawatts to gigawatts. This leap has created an unprecedented imbalance between supply and demand, along with years-long backlogs for grid connections.

In an industry where speed to market can determine market leadership, waiting years for grid access is not an option. Power constraints now dictate where facilities can be built and how quickly cloud providers can expand. A delayed facility doesn't just lose time; it forfeits market opportunity at a moment when AI competition is fierce and global.

As a result, cloud companies are racing toward behind-the-meter strategies: building temporary power plants to sidestep grid bottlenecks in what The Wall Street Journal calls the “bring your own power” boom. These systems, constructed with natural gas industrial turbines and reciprocating engines, can be operational within months rather than years, providing predictability and acceleration in a market where both are critical.

But these bridging plants come with significant drawbacks. Although faster than waiting for the grid, they depend on long-lead equipment with fragile supply chains, and they are complex to deploy and scale. Once utilities deliver grid capacity, much of that investment becomes stranded, leaving companies with expensive infrastructure they no longer need. Because the capital must be recovered over a much shorter useful life, the levelized cost per kilowatt-hour soars, turning a short-term workaround into a long-term liability.
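
To see why stranding drives up cost, consider a simplified levelized-cost calculation. The sketch below annualizes capital with a standard capital recovery factor and divides by annual output. Every input (a 100 MW plant, $150 million of capital, an 8% discount rate, a 90% capacity factor, and the fixed and variable costs) is a hypothetical round number rather than data from any real project, and the model ignores any resale or salvage value the equipment might retain. Results are in USD per MWh; divide by 1,000 for USD per kWh.

```python
def capital_recovery_factor(rate: float, years: int) -> float:
    """Share of the upfront capital that must be recovered each year."""
    growth = (1 + rate) ** years
    return rate * growth / (growth - 1)


def simple_lcoe(capex_usd: float, fixed_om_usd_per_year: float,
                variable_usd_per_mwh: float, capacity_mw: float,
                capacity_factor: float, years: int, rate: float) -> float:
    """Simplified levelized cost of energy in USD/MWh (no salvage value)."""
    annual_mwh = capacity_mw * capacity_factor * 8760  # 8760 hours per year
    annual_capital = capex_usd * capital_recovery_factor(rate, years)
    return (annual_capital + fixed_om_usd_per_year) / annual_mwh + variable_usd_per_mwh


# Hypothetical 100 MW bridging plant; all figures are illustrative assumptions.
inputs = dict(capex_usd=150e6, fixed_om_usd_per_year=2e6,
              variable_usd_per_mwh=40.0, capacity_mw=100.0,
              capacity_factor=0.9, rate=0.08)

lcoe_long = simple_lcoe(years=20, **inputs)   # plant used for its full life
lcoe_short = simple_lcoe(years=3, **inputs)   # plant stranded after 3 years

print(f"20-year recovery: ${lcoe_long:.1f}/MWh")
print(f" 3-year recovery: ${lcoe_short:.1f}/MWh")
```

With these assumed inputs, shrinking the recovery period from 20 years to 3 nearly doubles the levelized cost, even though the plant burns the same fuel per MWh.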

The challenges don’t end there. Even where power is available, AI introduces a second problem with profound implications: power quality.

AI’s Volatile Load Pattern

Traditional cloud workloads consist of millions of uncorrelated, steady tasks whose combined power draw is smooth and predictable. AI training jobs, by contrast, generate highly synchronized compute bursts across tens of thousands of processors: because the GPUs execute identical operations in parallel, their demand rises and falls together, producing rapid, large-scale fluctuations.

These abrupt transitions between idle and peak states can swing consumption by tens of megawatts in milliseconds. Such highly dynamic load profiles place extreme stress on electrical systems, inducing voltage sags, frequency deviations, and transient instability that can reduce equipment lifespan and trigger outages.
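
The contrast between uncorrelated and synchronized workloads can be illustrated with a toy model. The sketch below assumes each GPU draws either a hypothetical 100 W (idle) or 700 W (peak) and compares a cluster whose GPUs switch phases in lockstep with one whose GPUs are busy independently at random. The wattages, the 20,000-GPU cluster size, and the abstract time steps are assumptions for illustration, not measurements of any real hardware.

```python
import random

IDLE_W = 100.0  # hypothetical per-GPU idle draw, watts
PEAK_W = 700.0  # hypothetical per-GPU peak draw, watts


def aggregate_load_mw(num_gpus: int, steps: int, synchronized: bool,
                      seed: int = 0) -> list[float]:
    """Total cluster draw (MW) at each step of a toy workload model."""
    rng = random.Random(seed)
    series = []
    for t in range(steps):
        if synchronized:
            # All GPUs enter and leave the compute phase together.
            busy = num_gpus if t % 2 == 0 else 0
        else:
            # Each GPU is independently busy with probability 0.5.
            busy = sum(1 for _ in range(num_gpus) if rng.random() < 0.5)
        watts = busy * PEAK_W + (num_gpus - busy) * IDLE_W
        series.append(watts / 1e6)
    return series


def swing(series: list[float]) -> float:
    """Difference between the highest and lowest load in the series."""
    return max(series) - min(series)


sync = aggregate_load_mw(20_000, steps=100, synchronized=True)
indep = aggregate_load_mw(20_000, steps=100, synchronized=False)
print(f"synchronized swing: {swing(sync):.2f} MW")
print(f"independent swing:  {swing(indep):.2f} MW")
```

In this model the synchronized cluster swings the full 12 MW between idle and peak on every step, while the independent cluster's fluctuations largely cancel out: their spread grows only with the square root of the GPU count, so they stay well under 1 MW.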

This isn’t a theoretical concern. Power grids were not designed to accommodate near-instantaneous demand spikes of this magnitude. In the most extreme cases, AI power swings could take systems offline or even cause regional blackouts, exactly the opposite of what hyperscale digital infrastructure is meant to enable.

The Stopgap Response—and Why It Won’t Scale

To stabilize behind-the-meter conditions, cloud providers have resorted to assembling highly engineered, bespoke power stacks: combinations of industrial turbines, natural gas reciprocating engines, and large battery energy storage systems (BESS) from multiple vendors, integrated into complex, site-specific architectures.

While this approach works in the near term, it suffers from two structural flaws:

1. Complexity introduces fragility. Multiple vendor systems, diverse equipment footprints, and long supply chains can create more failure points, higher operational risk, and maintenance challenges.

2. Baked-in obsolescence. On-site power plants are designed for today’s known specifications—capacity, voltage, and rack density—even though AI power requirements can shift with nearly every product cycle. By the time systems go online, workload needs may have outgrown the infrastructure beneath them.

Cloud providers face a complex challenge: scaling AI infrastructure quickly without reliable utility power, while maintaining the flexibility to adapt to relentless innovation and protect long-term returns.

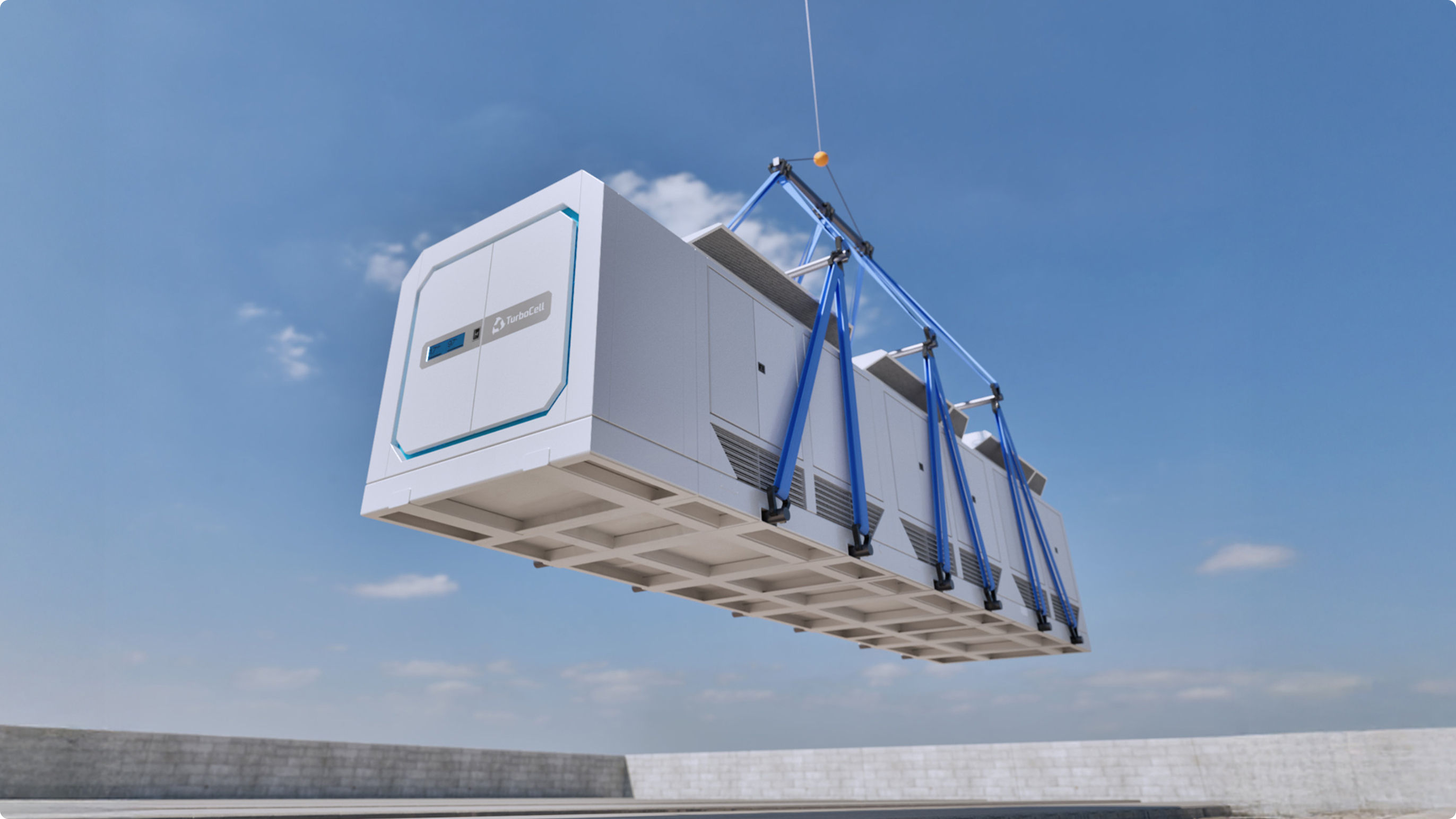

A New Class of Solution: Dual-Use Generator Systems

Emerging dual-use generator designs offer a more elegant, future-proof solution. Rather than being functionally limited to bridging or backup power, these systems combine both capabilities into a single architecture engineered for the full AI infrastructure lifecycle.

These dual-use systems:

- provide continuous bridging power to bypass grid delays;

- deliver ultra-fast backup response to manage AI step-load changes;

- simplify electrical one-lines, reducing points of failure and operational expense;

- preserve long-term value, avoiding stranded assets once grid interconnection arrives;

- seamlessly transition from day-1 prime power into backup or demand-response operations; and

- fit into smaller, more efficient footprints than legacy systems.

By delivering a single system capable of high-efficiency, ultra-low-emission baseload power, along with fast transient response and robust block-loading performance, dual-use designs streamline on-site generation and maximize long-term operational flexibility. When this high-performance generation is closely coupled with a battery in a hybrid DC architecture, it can absorb and actively stabilize volatile AI loads at the source. This approach prevents damaging electrical disturbances from propagating into GPU power delivery systems, on-site generation equipment, and, ultimately, the utility grid after interconnection.
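
One way to picture how a closely coupled battery shields a generator from volatile loads is a simple dispatch split: the generator follows a smoothed, ramp-limited version of the load, and the battery supplies or absorbs the remainder. The sketch below is a toy control loop under stated assumptions; the function name `hybrid_dispatch`, the exponential-moving-average filter, the ramp limit, and the alternating 2 MW / 14 MW load are all hypothetical, and real hybrid DC systems rely on power-electronics controls not described here.

```python
def hybrid_dispatch(load_mw: list[float], smoothing: float,
                    max_ramp_mw: float) -> tuple[list[float], list[float]]:
    """Split a volatile load between a generator and a battery (toy model).

    The generator tracks an exponential moving average of the load, limited to
    max_ramp_mw of change per step. The battery covers the difference:
    positive values mean discharging, negative values mean charging.
    """
    target = gen = load_mw[0]
    gen_mw, batt_mw = [], []
    for demand in load_mw:
        target += smoothing * (demand - target)                   # smoothed setpoint
        gen += max(-max_ramp_mw, min(max_ramp_mw, target - gen))  # ramp-limited move
        gen_mw.append(gen)
        batt_mw.append(demand - gen)                              # battery fills the gap
    return gen_mw, batt_mw


# Hypothetical synchronized AI load alternating between 2 MW and 14 MW each step.
load = [2.0 if t % 2 == 0 else 14.0 for t in range(200)]
gen, batt = hybrid_dispatch(load, smoothing=0.05, max_ramp_mw=0.5)

tail = slice(-50, None)  # inspect steady-state behavior only
print(f"load swing:      {max(load[tail]) - min(load[tail]):.1f} MW")
print(f"generator swing: {max(gen[tail]) - min(gen[tail]):.2f} MW")
print(f"battery range:   {min(batt[tail]):.2f} to {max(batt[tail]):.2f} MW")
```

Under these assumptions, the generator settles near the 8 MW average and moves only about 0.3 MW per step, while the battery alternates between discharging and charging roughly 5.8 MW to cover the 12 MW swings.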

Unlike traditional systems, these dual-use platforms are built from standardized modular building blocks, enabling rapid deployment closely matched to IT demand. This approach supports just-in-time power planning instead of requiring massive upfront investments. “Decoupling power generation from building planning allows on-demand scaling, reducing the lag between large capital investments and revenue,” says Feasey.
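
The capital argument for modular, just-in-time power can be made concrete with simple arithmetic. The sketch below compares building all capacity on day one with adding fixed-size blocks only as demand requires, and counts the idle capacity each approach carries. The 25 MW block size and the five-year demand ramp are hypothetical figures chosen for illustration, and the model ignores installation lead times.

```python
import math


def modular_plan(demand_mw: list[float], block_mw: float) -> list[int]:
    """Blocks installed in each period so capacity always covers demand."""
    installed, plan = 0, []
    for demand in demand_mw:
        # Add blocks only when demand exceeds installed capacity; never remove any.
        installed = max(installed, math.ceil(demand / block_mw))
        plan.append(installed)
    return plan


def idle_capacity(capacity_mw: list[float], demand_mw: list[float]) -> float:
    """Sum of unused capacity across periods (MW-periods)."""
    return sum(cap - dem for cap, dem in zip(capacity_mw, demand_mw))


demand = [20, 60, 110, 180, 250]  # hypothetical IT demand per year, MW
BLOCK_MW = 25                     # hypothetical block size, MW

blocks = modular_plan(demand, BLOCK_MW)
modular_capacity = [b * BLOCK_MW for b in blocks]
upfront_capacity = [max(demand)] * len(demand)  # everything built on day one

print("blocks per year:", blocks)
print("idle MW-years, modular:", idle_capacity(modular_capacity, demand))
print("idle MW-years, upfront:", idle_capacity(upfront_capacity, demand))
```

Under these assumptions, both plans end at 250 MW, but the modular plan carries far less capacity that has been paid for yet is not yet earning revenue.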

These multi-function on-site generation assets don’t just solve short-term problems—they provide a platform that redefines the role of data centers within the broader grid ecosystem. Large, flexible loads could eventually help utilities manage demand-response cycles, stabilize grid volatility, and reduce cost pressures for all customers. In this way, data centers could become an asset, rather than a liability, to local grid communities.

As Chris Ellis, CEO of Edged Infrastructure, notes, two changes alone—behind-the-meter generator adoption and seamless grid-to-onsite power controls—could “shift the perception of data centers’ effect on the utility grid 180 degrees.”

Flexibility Is the New Efficiency

For years, data-center success was measured by power usage effectiveness (PUE). In the AI era, efficiency is no longer enough. Adaptability, meaning power that can scale, shift, and stabilize, is now the competitive differentiator.
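
PUE is defined as total facility energy divided by the energy delivered to IT equipment, so an ideal facility approaches 1.0. A minimal sketch of the calculation follows; the meter readings in the example are hypothetical. The ratio captures how much overhead a site spends per unit of IT energy, but it says nothing about how quickly that site can add capacity or ride through load swings, which is why it falls short as the sole measure in the AI era.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power usage effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    if total_facility_kwh < it_equipment_kwh:
        raise ValueError("facility energy cannot be less than IT energy")
    return total_facility_kwh / it_equipment_kwh


# Hypothetical month: 10 GWh to IT equipment plus 3 GWh for cooling,
# power conversion, and lighting.
print(f"PUE = {pue(13_000_000, 10_000_000):.2f}")
```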

A data center that cannot scale power quickly cannot scale revenue. One that cannot stabilize workloads cannot operate reliably. And one that locks capital into obsolete infrastructure—or ignores its role within the broader utility grid—cannot remain competitive.

Companies need power strategies that work as permanent infrastructure, not temporary workarounds, and dual-use systems provide exactly that.

The Path Forward

AI scaling laws continue to drive sustained investment in gigawatt-scale infrastructure, with each new processor generation demanding more power than the last. Cloud providers are racing to deploy AI compute, and the cost of falling behind increases daily. Many data centers designed just two years ago are already undersized.

The companies that succeed will be those that view power not as a commodity but as a strategic capability—one that must evolve in lockstep with AI itself. Those who treat power as an afterthought may find their ambitions stranded not by software, talent, or capital, but by something far more fundamental: electricity.