AI is rewriting the rules for everything, including the infrastructure behind it. A single AI-focused data center already consumes the electricity of a mid-sized city. Now imagine future campuses 20 times larger, drawing as much power as the entire state of New York. That surge in energy demand comes with an equally staggering need for cooling.

Why? AI processing units pack as much performance into a single chip as possible, so they run significantly hotter than traditional processors. Modern data centers pack thousands of them together, increasing power use per server rack almost 100-fold and generating extreme, concentrated heat. To cool it all, most operators today still rely on direct evaporative cooling systems that use enormous volumes of water to keep equipment within safe operating temperatures.

But the math is no longer working. At current growth rates, data centers could consume nearly a trillion gallons of water per year within a decade.1

Even worse: Bloomberg found that two-thirds of new data centers since 2022 are being built in areas already under high water stress. Many are lured to places like Arizona, Nevada, Texas, and Spain by lower land costs, attractive tax incentives, and increased access to low-cost energy. But these regions are also drying out, and residents and lawmakers are noticing. Some U.S. states and European countries courting data centers are simultaneously moving to regulate their water withdrawals.

The industry has reached a turning point. To keep AI scaling, operators must decouple cooling from water consumption.

Why Evaporative Cooling Is Becoming a Liability

Operators are experimenting with ways to reduce the impact of their evaporative systems—switching to greywater, optimizing existing equipment, or offsetting usage through “water-positive” projects.

But none of these fixes solve the core issue.

- Greywater isn’t a free pass: It’s often full of microbes, sediment, and corrosive materials that increase maintenance costs and operational complexity, problems that are significantly magnified at the gigawatt scale of today’s AI facilities.

- Efficiency gains hit a wall: The most advanced data center operators have pushed the fleet-wide average Water Usage Effectiveness (WUE) of their evaporative systems as low as 0.15 liters per kilowatt-hour, an impressive engineering feat. But when AI workloads scale into the hundreds of terawatt-hours, even this small per-unit water footprint translates into massive total withdrawals.

- Offsets don’t calm local concerns: Water impacts are hyper-local. A replenishment project miles away rarely satisfies communities living next to a massive facility in a drought-prone area.

- Evaporative cooling systems are more expensive than closed-loop systems: Across manufacturing, planning, permitting, and operations, closed-loop, non-evaporative systems are easier to design, faster to install, and offer better long-term value.

- Most operators are underpricing water risk: Water is still undervalued in many regions. When Microsoft applied Ecolab’s Water Risk Monetizer to its San Antonio facility, it found the real cost of water was 11 times higher than what showed up on its bill.

Taken together, evaporative cooling introduces unnecessary operational, regulatory, and reputational risks for AI cloud providers competing in a market where speed to compute is critical.
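To make the efficiency point concrete, here is a rough sketch that multiplies WUE by annual IT energy, which follows from how WUE is defined (site water use divided by IT equipment energy). The 0.15 L/kWh figure comes from the text; the 100 TWh and 300 TWh loads are illustrative assumptions standing in for "hundreds of terawatt-hours," and the estimate ignores water consumed off-site to generate the electricity. The function names are hypothetical.

```python
# Rough estimate of on-site cooling water implied by a given WUE.
# Assumptions (illustrative, not any operator's data):
#   - a single WUE value of 0.15 L/kWh applies to the whole load
#   - WUE is applied to IT equipment energy, per the metric's definition
#   - 100 TWh and 300 TWh stand in for "hundreds of terawatt-hours"

LITERS_PER_US_GALLON = 3.785411784
KWH_PER_TWH = 1e9


def annual_water_liters(wue_l_per_kwh: float, energy_twh: float) -> float:
    """Water consumed (liters) = WUE (L/kWh) x IT energy (kWh)."""
    return wue_l_per_kwh * energy_twh * KWH_PER_TWH


def liters_to_gallons(liters: float) -> float:
    """Convert liters to US gallons."""
    return liters / LITERS_PER_US_GALLON


if __name__ == "__main__":
    for twh in (100, 300):
        liters = annual_water_liters(0.15, twh)
        gallons = liters_to_gallons(liters)
        print(f"{twh} TWh at 0.15 L/kWh -> {liters / 1e9:.1f} billion L "
              f"(~{gallons / 1e9:.1f} billion US gal)")
```

Run as a script, it prints about 15 billion liters (roughly 4.0 billion US gallons) for 100 TWh and 45 billion liters (roughly 11.9 billion US gallons) for 300 TWh. Because total water scales linearly with energy, incremental WUE gains cannot keep pace with a load that keeps multiplying.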

What Next-Gen Cooling Must Deliver

As AI cloud providers evaluate future-proof options, any next-generation cooling solution must answer:

- Will it support today’s GPU thermal loads?

- Will it scale to tomorrow’s ultra-dense racks with more accelerators and higher aggregate heat?

- Can it be deployed anywhere—not just where water is abundant?

- Can it match the Power Usage Effectiveness (PUE) of best-in-class evaporative systems?

- Can it integrate seamlessly into existing data center infrastructure?

- Does it speed time to compute and mitigate long-term operational and reputational risk?

Waterless cooling has evolved, with a new class of systems purpose-built for the future of AI.

Through ThermalWorks, closed-loop waterless cooling delivers industry-leading PUEs without compromise. Edged, part of Endeavour, became the first platform to deploy ThermalWorks at gigawatt scale across its global network, helping maximize tokens-per-watt of AI workloads.

Through OEM co-design and key innovations, ThermalWorks has closed the performance gap with leading evaporative systems. These innovations include:

- High-efficiency microchannel coils: Transfer heat more effectively in a smaller footprint, reducing energy use.

- Ultra-efficient variable-speed compressors: Precisely control when mechanical cooling activates, minimizing unnecessary power consumption.

- Built-in economizers: Capture and repurpose waste heat at up to 140°F, turning a byproduct into a resource.

- Optimized free cooling: Harness the natural flow of heat to cooler ambient environments, reducing compressor run time through full and partial free cooling.
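The idea behind full and partial free cooling can be sketched with a simplified model. This is an illustrative assumption, not how ThermalWorks actually controls its equipment: a dry cooler can chill returning liquid no lower than the ambient temperature plus a fixed approach (10°F here, a hypothetical value), and compressors handle whatever cooling remains between that point and the supply setpoint. The names `free_cooling_fraction`, `cooling_mode`, and `CoolingMode` are hypothetical.

```python
from enum import Enum


class CoolingMode(Enum):
    FULL_FREE = "full free cooling"        # dry coolers alone reach the supply setpoint
    PARTIAL_FREE = "partial free cooling"  # dry coolers pre-cool, compressors trim the rest
    MECHANICAL = "mechanical cooling"      # ambient too warm to help; compressors do it all


def free_cooling_fraction(ambient_f: float, supply_f: float, return_f: float,
                          approach_f: float = 10.0) -> float:
    """Share (0..1) of the return-to-supply temperature drop a dry cooler can provide.

    Simplified model (assumption): the dry cooler can chill liquid no lower
    than ambient + approach; any cooling below that needs a compressor.
    """
    if return_f <= supply_f:
        raise ValueError("return temperature must exceed supply temperature")
    # Lowest outlet the dry cooler can reach, never colder than the setpoint.
    dry_cooler_outlet = max(supply_f, ambient_f + approach_f)
    free_drop = max(0.0, return_f - dry_cooler_outlet)
    return free_drop / (return_f - supply_f)


def cooling_mode(ambient_f: float, supply_f: float, return_f: float,
                 approach_f: float = 10.0) -> CoolingMode:
    """Classify the operating mode from the free-cooling fraction."""
    fraction = free_cooling_fraction(ambient_f, supply_f, return_f, approach_f)
    if fraction >= 1.0:
        return CoolingMode.FULL_FREE
    if fraction > 0.0:
        return CoolingMode.PARTIAL_FREE
    return CoolingMode.MECHANICAL


if __name__ == "__main__":
    # Hypothetical 95°F day, same 90°F supply, two different return temperatures.
    for ret in (110.0, 150.0):
        frac = free_cooling_fraction(95.0, 90.0, ret)
        print(f"return {ret:.0f}°F: {frac:.0%} free ({cooling_mode(95.0, 90.0, ret).value})")
```

In this model, on a 95°F day a loop supplying 90°F and returning 110°F gets only 25% of its cooling for free, while the same supply with a 150°F return gets 75%. Hotter return liquid leaves more headroom above the ambient air, so more of the work can skip the compressor.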

Liquid-to-Chip Cooling Pushes the Industry Forward

Leading semiconductor and AI accelerator manufacturers are driving a fundamental shift in thermal design. Microscopic channels are now etched directly into chip surfaces, enabling liquid-to-chip cooling that brings coolant into direct contact with the hottest regions of the die. This approach enables:

- more precise temperature control

- higher allowable chip temperatures

- better performance without thermal throttling

This architectural shift pairs naturally with non-evaporative cooling systems. Legacy evaporative systems typically operate at a delta-T (the temperature difference between supply and return liquid) of approximately 20°F. As AI accelerators increasingly operate above 130°F, these systems must recirculate coolant multiple times to reject heat, increasing energy consumption and reducing overall efficiency.

ThermalWorks systems can run delta-Ts of up to 60°F, often rejecting heat in a single pass and enabling far more free cooling, even in hot climates where evaporative systems struggle.
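Why does a larger delta-T help? For sensible heat transfer, the heat a liquid loop carries is Q = ṁ·cp·ΔT, so for a fixed heat load the required coolant flow falls in proportion to ΔT. The sketch below assumes plain water (cp ≈ 4.186 kJ/kg·K) and a hypothetical 1 MW heat load; real loops often use glycol mixtures with a lower heat capacity, and the function names are illustrative only.

```python
# Simplified sensible-heat balance for a liquid cooling loop:
#   Q = m_dot * cp * dT   =>   m_dot = Q / (cp * dT)
# Assumption: the coolant is plain water; glycol mixtures have a lower cp.
CP_WATER_KJ_PER_KG_K = 4.186


def delta_f_to_kelvin(delta_f: float) -> float:
    """Convert a temperature *difference* from °F to kelvin (no 32°F offset)."""
    return delta_f * 5.0 / 9.0


def required_flow_kg_s(heat_kw: float, delta_t_f: float,
                       cp_kj_per_kg_k: float = CP_WATER_KJ_PER_KG_K) -> float:
    """Mass flow (kg/s) needed to carry heat_kw at a supply/return spread of delta_t_f."""
    if delta_t_f <= 0:
        raise ValueError("delta-T must be positive")
    # kW = kJ/s, so (kJ/s) / (kJ/(kg*K) * K) = kg/s
    return heat_kw / (cp_kj_per_kg_k * delta_f_to_kelvin(delta_t_f))


if __name__ == "__main__":
    HEAT_KW = 1000.0  # hypothetical 1 MW heat load
    for dt in (20.0, 60.0):
        print(f"delta-T {dt:.0f}°F -> {required_flow_kg_s(HEAT_KW, dt):.1f} kg/s")
```

Under these assumptions, a 20°F loop needs about 21.5 kg/s of water to carry 1 MW, while a 60°F loop needs about 7.2 kg/s: one third of the flow for the same heat, with correspondingly less pumping.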

Why Waterless Cooling Unlocks New Geographies

With next-generation accelerators tolerating higher operating temperatures and enabling more hours of free cooling, non-evaporative systems can operate more efficiently across a broader range of hot, dry regions, precisely where land is inexpensive and low-cost renewable energy is abundant.

Evaporative systems cannot take advantage of these locations without compounding local water risks, but waterless systems can.

Another advantage: deployment speed.

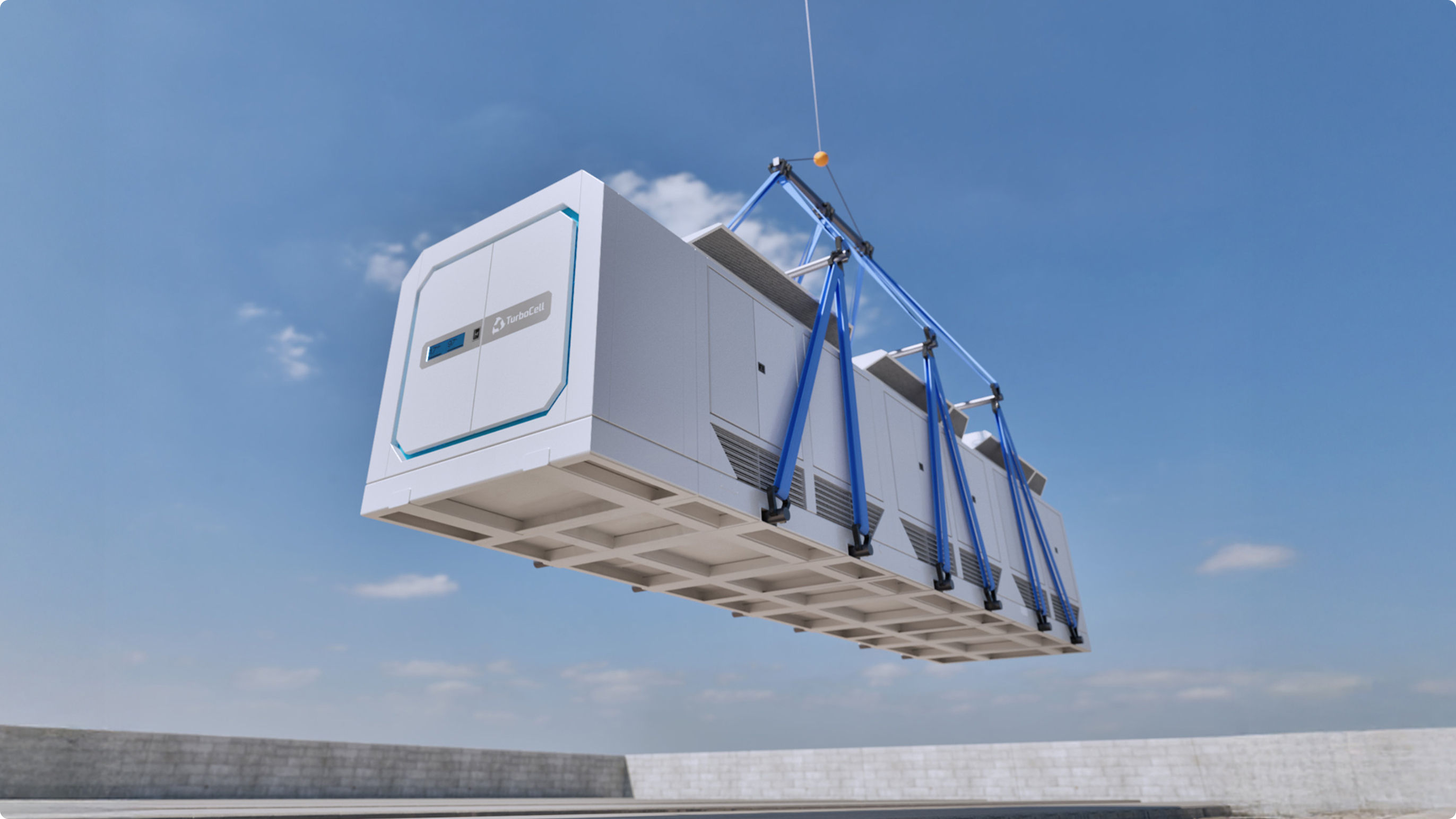

“The number one question we get from AI cloud providers is ‘how fast can I get capacity?’” says James Feasey, Senior Director of AI Infrastructure at Endeavour. Modular waterless systems can be deployed in weeks and scaled as needed, without the multi-year planning, permitting requirements, and community resistance that projects with large water dependencies encounter.

ThermalWorks: Cooling Without Compromise

With water scarcity rising, regulatory pressure mounting, and workloads accelerating, the industry has reached a crossover point. As Feasey puts it, “Waterless cooling removes so many sources of friction that slow the delivery of compute that it’s now the clear choice for next-generation AI infrastructure.”

Water shouldn’t constrain AI’s growth. With ThermalWorks, operators can scale without compromise, and without water.

1 Based on current industry practices, direct data center water consumption could be as high as 875 billion gallons per year by 2036 and over 2 trillion gallons by 2040. Growth projections are based on Lawrence Berkeley National Lab estimates for U.S. data centers (‘high’ scenario), with the growth curve extended to future years and expanded to account for the non-U.S. share of global data center capacity.